Short Summary

In the context of Concordia’s AI summer school and the critical AI conference, Unstable Diffusions, we held two related engagement workshops: one with students of the summer school, and one with Canadian civil society actors. Across both workshops we engaged participants in joint futuring exercises that combined the strategic foresight method Three Horizons with large language models (LLMs) such as ChatGPT and HuggingChat. Participants critiqued current trajectories surrounding the social life of AI, mapped alternative futures, and produced a series of short videos summarizing their thoughts and findings.

Team

Team Lead

Fenwick McKelvey

Workshop Design & Facilitation

Meaghan Wester

Robert Marinov

Maurice Jones

Technical Assistance

RA Nicholas Dundorf

RA Jade Kafieh

RA Anika Nochasak

Toolkit Design

Meaghan Wester

Output Production & Coordination

Nick Gertler

Sophie Toupin

Website Design

Nick Gertler

Context

In the context of Concordia’s AI summer school and the critical AI conference, Unstable Diffusions, we held two related engagement workshops: one with students of the summer school, and one with Canadian civil society actors. The workshops were situated within Canada’s AI regulatory context, with focus on the Artificial Intelligence and Data Act (AIDA), a part of Bill C-27, the Digital Charter Implementation Act, 2022.

Workshop Methods

Strategic Foresight with Three Horizons

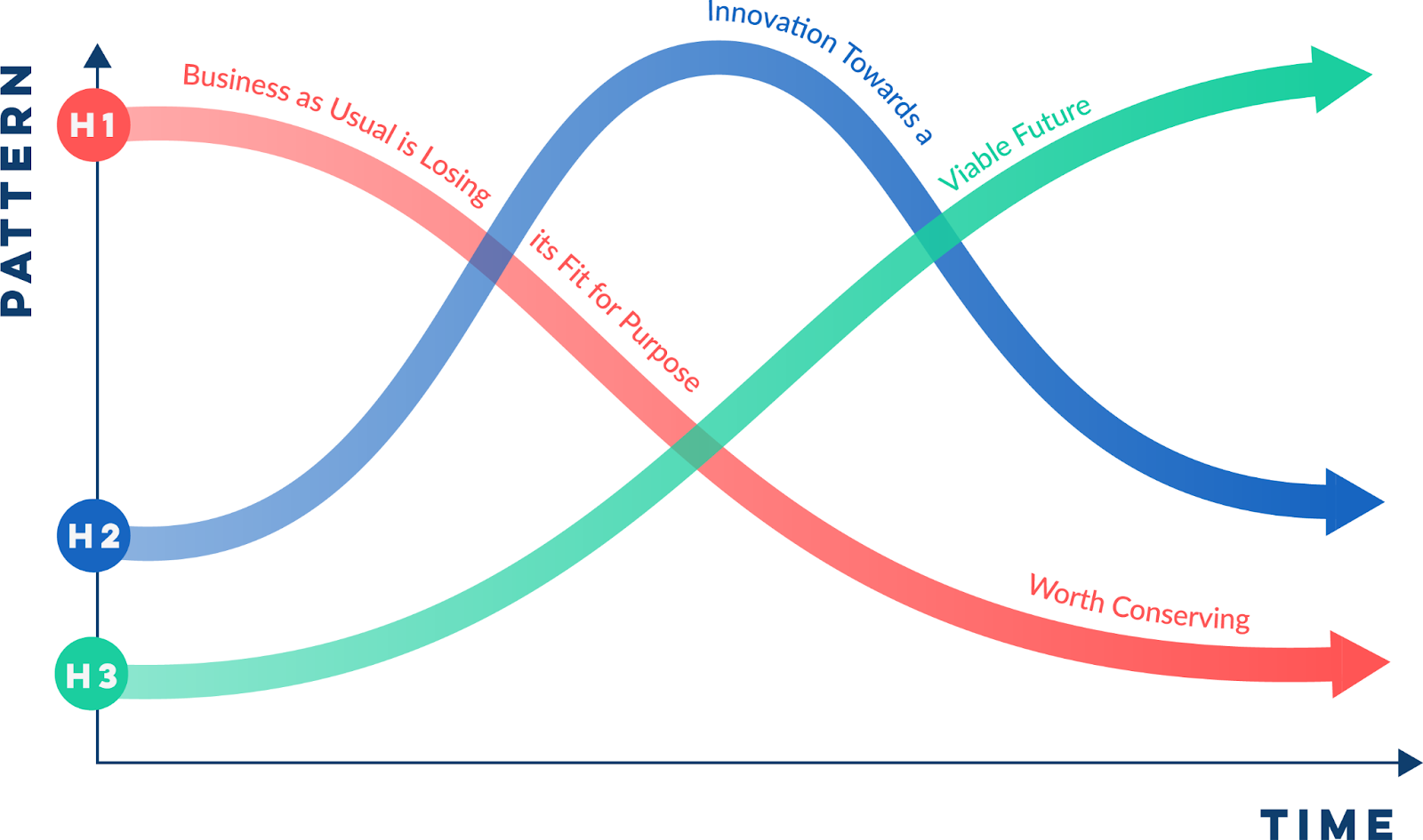

Both workshops deployed the frame of the Three Horizons method rooted in strategic foresight, which originated primarily within corporate and governmental organizations. Strategic foresight and the specific method of Three Horizons are useful tools for coordinating conversation about the future.

The Three Horizons method relates three trajectories: Horizon 1, which presents a business-as-usual trajectory; Horizon 3, which imagines an ideal alternative future; and Horizon 2, which identifies the steps needed to transition from the trajectory of Horizon 1 to that of Horizon 3.

Techno-cultural workshop

While the Three Horizons method provided the overarching frame of the workshops, we also drew on the techno-cultural workshop, which aims to open new forms of problematization by having participants engage with concrete technologies.

“[The] techno-cultural workshop builds on the critical, creative hacker ethos of technological engagement, and the collective practice of the hackathon. We see this as an innovative method for opening up the materiality of computational media and data flows as a way to better grapple with the socio-cultural and political-economic dimensions of datafication.” (Coté and Pybus, 2016, p. 89)

We deployed the ethos of the techno-cultural workshop through participants’ frequent engagement with a Large Language Model (LLM) chatbot of their choice. Chatbots included OpenAI’s ChatGPT, Google’s Bard, and HuggingChat by Hugging Face. We provided guiding prompts for the different tasks at hand. For instance, when interrogating news headlines we proposed:

“What are the values and assumptions underlying the headline: ENTER HEADLINE HERE”

Participants were encouraged to play with different forms of prompt engineering throughout the workshop.
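The guiding prompt above can be treated as a reusable template. The following is a minimal sketch in Python of how a facilitator might fill it in for several headlines before pasting the results into a chatbot. The helper name `build_headline_prompt` and the placeholder headlines are assumptions made for illustration; they are not part of the workshop toolkit.

```python
# Guiding prompt from the workshop, with the headline left as a placeholder.
PROMPT_TEMPLATE = (
    "What are the values and assumptions underlying the headline: {headline}"
)


def build_headline_prompt(headline: str) -> str:
    """Insert one headline into the guiding prompt (hypothetical helper)."""
    # Collapse stray whitespace and line breaks left over from copy-pasting.
    cleaned = " ".join(headline.split())
    if not cleaned:
        raise ValueError("headline must not be empty")
    return PROMPT_TEMPLATE.format(headline=cleaned)


# Made-up placeholder headlines, not taken from the workshop's media scan.
headlines = ["Placeholder headline one", "  Placeholder   headline two "]
prompts = [build_headline_prompt(h) for h in headlines]

for prompt in prompts:
    print(prompt)
```

Keeping the wording fixed and varying only the headline makes it easier to compare how a chatbot responds across headlines, while still leaving room to experiment with alternative phrasings of the template itself.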

Through this engagement, participants not only focused on critique and visioning practices but were also constantly reminded of the technologies under discussion. This creates the potential to move from the abstract discussion of these technologies that tends to characterize policy-making discourse towards sustained attention to the materiality and the effects of AI.

Workshop Flow

Part 1: Student Workshop

On Day 1, students were tasked with imagining Horizon 1 (a business-as-usual future) and Horizon 3 (an alternative, desirable future), each projected 15 years into the future. To address Horizon 1, students conducted a media scan, gathering news headlines that represented current issues surrounding AI and its governance. In conversation with an LLM of their choice, students then identified the underlying values that drive the future presented in these news headlines and extrapolated where these values might lead.

In response to Horizon 1, teams were instructed to draft a short paragraph describing a desirable future for society and AI based on alternative values that they thought up together. Following this, students were invited to interrogate their vision through an LLM chatbot of their choice, exploring whether it was able to accurately identify their alternative, desirable values. At the end of Day 1, teams reconvened for a group discussion touching upon Horizons 1 and 3, as well as their exchanges with the LLM chatbot.

Part 2: Civil Society Workshop

On Day 2, building on students’ Horizons 1 & 3, invited civil society experts were responsible for imagining Horizon 2, which represents the necessary steps and innovations that could aid in transitioning from Horizon 1 towards Horizon 3. Whereas the student workshop presented a broad investigation of the state of AI and its governance, the expert workshop focused on 1) concrete legislation in the form of the Canadian Artificial Intelligence and Data Act as a material representation of Horizon 1, 2) the implications of this legislation that civil society experts foresee for the communities they serve, and 3) the potential of the students’ Horizon 3 to become a guiding vision for transition.

To begin, experts were instructed to read and investigate Horizons 1 and 3 as envisioned by students on Day 1. The experts were then tasked with situating Horizon 1 within their specific fields of expertise and their communities by identifying 6-7 stakeholders and how they are currently impacted by developments around AI and its governance in Canada. Turning to Horizon 2, experts were tasked with identifying principles and provisions that should be added to or modified in AIDA so that the draft legislation, rather than extending Horizon 1 with all the implications this would carry for their communities, could support a transition towards Horizon 3 as envisioned by the students. Based on these principles and provisions, experts were invited to use an LLM chatbot of their choice to draft an alternative version of AIDA. The closing discussion focused on imagining the future, working with LLMs, and concrete next steps civil society experts could take.

We'd like you to write an AI Act for Canada, in the style of Canadian legislation. Can you help me with that? In the next prompts we’ll give you provisions that should be included, as well as values that should be incorporated.

ChatGPT

Of course! I'd be happy to help you draft an AI Act for Canada. Please provide me with the provisions and values you would like to include, and I will assist you in creating a suitable legislation in the style of Canadian laws.
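The exchange above opens a multi-turn drafting conversation: an opening request, followed by prompts that supply provisions and values. A minimal sketch of how that sequence of user turns could be prepared in advance is shown below, in Python. The `role`/`content` dictionary shape is an assumed, commonly used chat-message convention rather than any specific chatbot's API, the function name is hypothetical, and the provisions are placeholders; the example values are drawn from the drafted preamble quoted in this report.

```python
# Opening request as used in the workshop (straight quotes for simplicity).
OPENING_PROMPT = (
    "We'd like you to write an AI Act for Canada, in the style of Canadian "
    "legislation. Can you help me with that? In the next prompts we'll give "
    "you provisions that should be included, as well as values that should "
    "be incorporated."
)


def build_drafting_turns(provisions, values):
    """Return user turns in order: opening, one per provision, then values."""
    turns = [{"role": "user", "content": OPENING_PROMPT}]
    for provision in provisions:
        turns.append(
            {"role": "user", "content": f"Provision to include: {provision}"}
        )
    if values:
        turns.append(
            {"role": "user", "content": "Values to incorporate: " + ", ".join(values)}
        )
    return turns


# Placeholder provisions (assumptions); values come from the drafted preamble.
provisions = ["<provision 1>", "<provision 2>"]
values = ["human rights", "democratic participation", "equity"]
turns = build_drafting_turns(provisions, values)

for turn in turns:
    print(turn["content"])
```

Each turn would be entered one at a time, leaving space between them to read the chatbot's response and adjust the next prompt.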

Together, the two workshops provided space to collectively think and talk through technological futures in Canada, and possible meaningful points of intervention.

AI ACT

Preamble:

An Act to govern the development, deployment, and use of artificial intelligence systems in Canada, while upholding the values of human rights, democratic participation, equity, diversity, inclusion, the Canadian Charter of Rights and Freedoms, and Indigenous self-determination.

Outputs

During this workshop we co-produced:

- Two mappings of Horizon 1 and 3 trajectories

- A series of student videos

- A workshop toolkit

Limitations

1. The workshop requires some background knowledge of AI and may prove less accessible to some participants. Nonetheless, the use of news headlines and the focus on underlying values help direct conversations and imaginaries towards more relatable and graspable subject matter, which can also aid in learning about AI during the workshop.

2. LLMs may not always respond well to what is being asked of them, and prompts may require troubleshooting and experimentation. This is, however, also an opportunity for critical reflection and engagement, as well as for learning first-hand about the capacities and limitations of existing LLMs.

3. Knowledge integration and translation between student/community groups (e.g., Day 1 participants) and expert groups (e.g., Day 2 participants) can pose challenges. Where Horizons 1 and 3 are developed around broad, society-level concerns and imaginaries, experts may find it difficult and time-consuming to familiarize themselves with them and to translate them into applied situations and actionable steps for Horizon 2 within the span of a short workshop. Nonetheless, this challenge is part of the learning experience and can be iterative and generative for expert conversations and knowledge sharing.

Next Steps

Beyond the publication of this report and corresponding toolkit, we are aiming for a series of follow-up workshops implementing the findings and learnings of the May workshop both in terms of content and methodology. These follow-up workshops are aimed at students, researchers, civil society, and members of the broader public and are set to take place in Fall 2023 and Winter/Spring 2024.